Intel, at its Innovation 2023 event, announced the 14th Gen Meteor Lake processor lineup, which comes with a range of improvements and new features. While the Intel 4 process, a disaggregated architecture, and Intel Arc integrated graphics are exciting upgrades, users will appreciate the AI features built into Meteor Lake chips. Intel announced that it is bringing artificial intelligence to modern PCs through an all-new neural processing unit (NPU) in 14th Gen Meteor Lake processors. But what is the NPU, and how will it help? Let’s talk about it below.

Intel Brings NPU to Client Chips for the First Time

While artificial intelligence is widely used online, running it in the cloud has limitations. The most prominent of them are high latency and connectivity issues. A Neural Processing Unit (or NPU) is a dedicated unit inside the processor for handling AI tasks. So, instead of relying on the cloud, all AI-related processing is done on the device itself. Intel’s new NPU will be able to do exactly that.

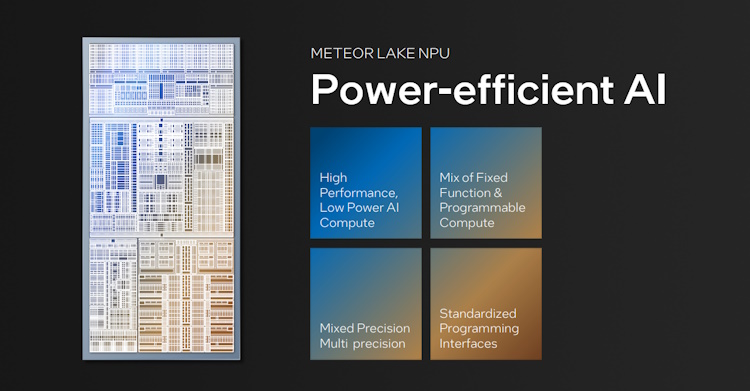

While Intel has been experimenting with AI for a while, this will be the first time the company brings an NPU to its client silicon. The NPU on Meteor Lake chips is a dedicated low-power AI engine that can handle sustained AI workloads both offline and online.

This means that instead of relying on AI programs that do the heavy lifting over the internet, you will be able to use the Intel NPU for the same jobs, such as AI image editing, audio separation, and more. Having an on-device NPU for AI processing and inference will undoubtedly have plenty of advantages.

Since there is no round trip to the cloud, users can expect much faster processing and output. Additionally, Intel’s NPU will help improve privacy and security through the chip’s security protocols. It’s safe to say you will be able to use AI more flexibly, and on a daily basis.
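To illustrate the idea of preferring local hardware over the cloud, here is a minimal sketch in Python. It is not Intel's or any library's API: the `pick_device` helper, the device labels, and the `"CLOUD"` fallback are all hypothetical names chosen for this example.

```python
# Hypothetical preference order: try the NPU first, then other local devices.
PREFERRED_ORDER = ("NPU", "GPU", "CPU")


def pick_device(available, preferred=PREFERRED_ORDER):
    """Return the first preferred device that is present, or "CLOUD" if none is."""
    present = {name.upper() for name in available}
    for name in preferred:
        if name in present:
            return name
    return "CLOUD"  # assumed fallback label, not a real device name


if __name__ == "__main__":
    print(pick_device(["CPU", "GPU", "NPU"]))
    print(pick_device(["CPU"]))
```

In this sketch, a machine that reports an NPU would run the AI task there, and the cloud is only considered when no local device is listed.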

Intel NPU Can Sustainably Interchange Workloads

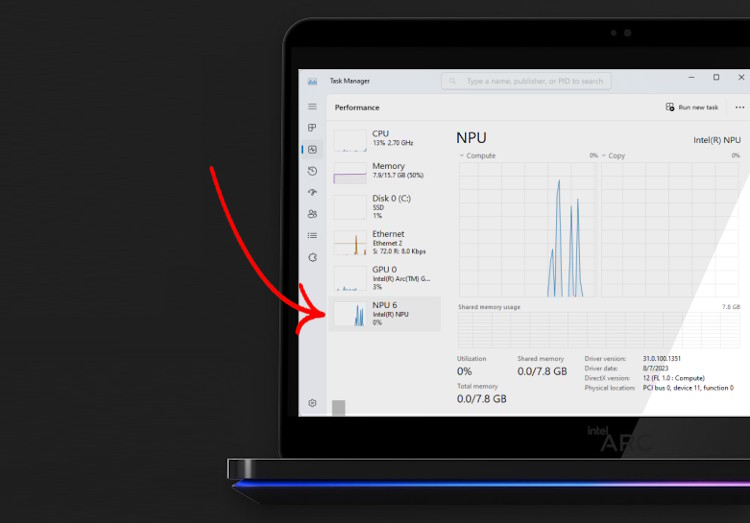

The Intel NPU is divided into two main parts that each do their own job: Device Management and Compute Management. The former supports a new driver model from Microsoft called the Microsoft Compute Driver Model (MCDM). This is vital to facilitate AI processing on the device. Additionally, thanks to this driver model, users will be able to see the Intel NPU in the Windows Task Manager along with the CPU and GPU.

Intel has been working on the aforementioned driver model with Microsoft for the last six years. This has enabled the company to ensure that the NPU works through tasks efficiently while dynamically managing power. This means the NPU can quickly switch to a low-power state to sustain these workloads and back again.

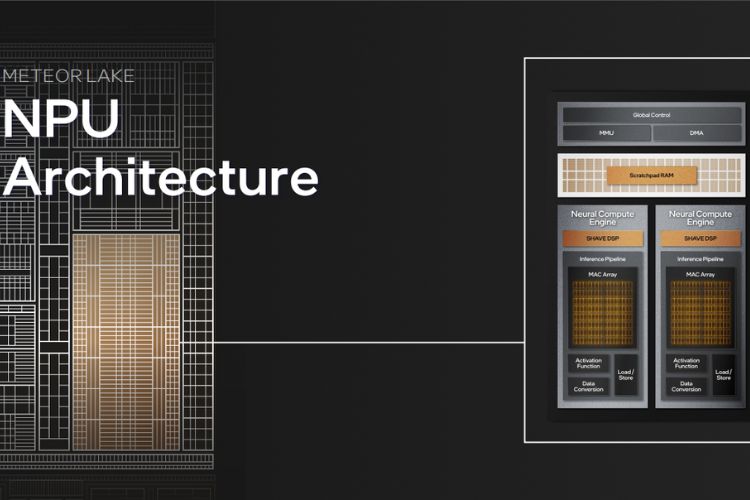

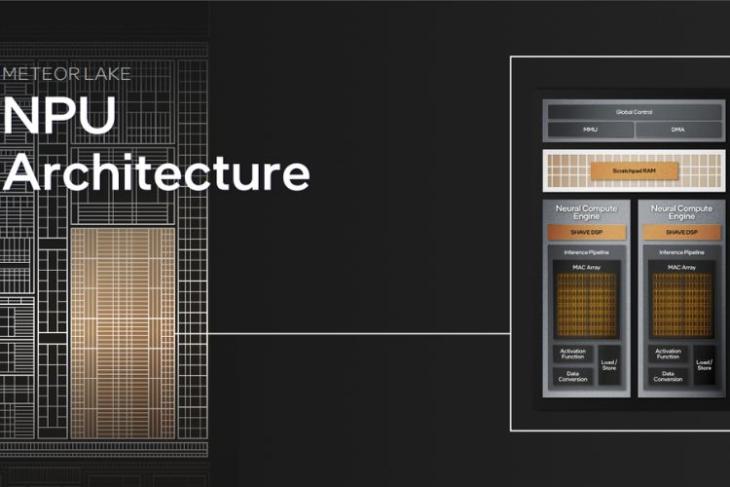

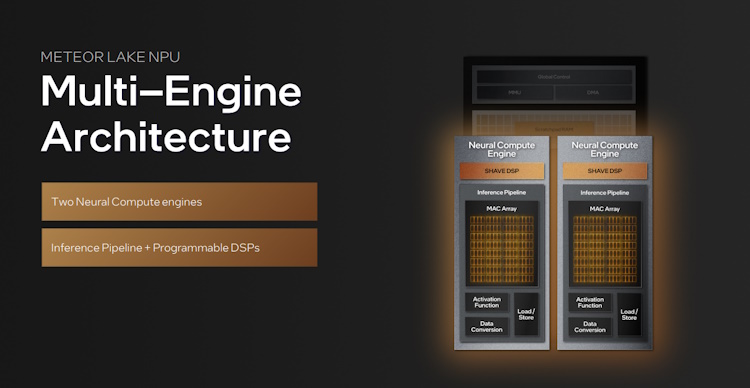

The Intel Meteor Lake NPU employs a multi-engine architecture. Built inside it are two neural compute engines. These can work on two different workloads or together on the same one. Within the neural engine lies the Inference Pipeline, which acts as a core component and helps the NPU function.
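The two modes described above, separate workloads versus one shared workload, can be modeled with a toy Python sketch. This says nothing about how the hardware actually schedules work: the worker threads merely stand in for the two engines, and `run_on_engine`, `run_separate`, and `run_together` are hypothetical names, with squaring a number as a placeholder for inference.

```python
from concurrent.futures import ThreadPoolExecutor

NUM_ENGINES = 2  # the article describes two neural compute engines


def run_on_engine(engine_id, items):
    """Placeholder for inference: square each item and tag it with the engine id."""
    return [(engine_id, x * x) for x in items]


def run_separate(workload_a, workload_b):
    """Mode 1: each engine takes its own workload."""
    with ThreadPoolExecutor(max_workers=NUM_ENGINES) as pool:
        fut_a = pool.submit(run_on_engine, 0, workload_a)
        fut_b = pool.submit(run_on_engine, 1, workload_b)
        return fut_a.result(), fut_b.result()


def run_together(workload):
    """Mode 2: both engines share one workload, split in half and rejoined in order."""
    mid = (len(workload) + 1) // 2
    first, second = run_separate(workload[:mid], workload[mid:])
    return first + second


if __name__ == "__main__":
    print(run_separate([1, 2], [3]))
    print(run_together([1, 2, 3]))
```

The engine id in each result makes it visible which half of a shared workload went to which engine.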

Intel’s NPU Makes On-Device AI Possible

While the actual workings of the NPU go far deeper, users can expect plenty of firsts with the new Meteor Lake architecture. With on-device AI processing coming to Intel’s client chips, it will be exciting to see their range of applications. Also, the presence of the Intel 14th Gen Meteor Lake’s NPU in the Task Manager, while it sounds simple, signifies that consumers will be able to capitalize on it. However, we will have to wait for the rollout to see how far we can push it.

Featured Image Courtesy: Intel