Just after the launch of Gemini 1.0 Ultra with the Bard rebrand last week, Google is back with a new model to compete with GPT-4. This is the Gemini 1.5 Pro model, the successor to Gemini 1.0 Pro, which currently powers the free version of Gemini (formerly Bard).

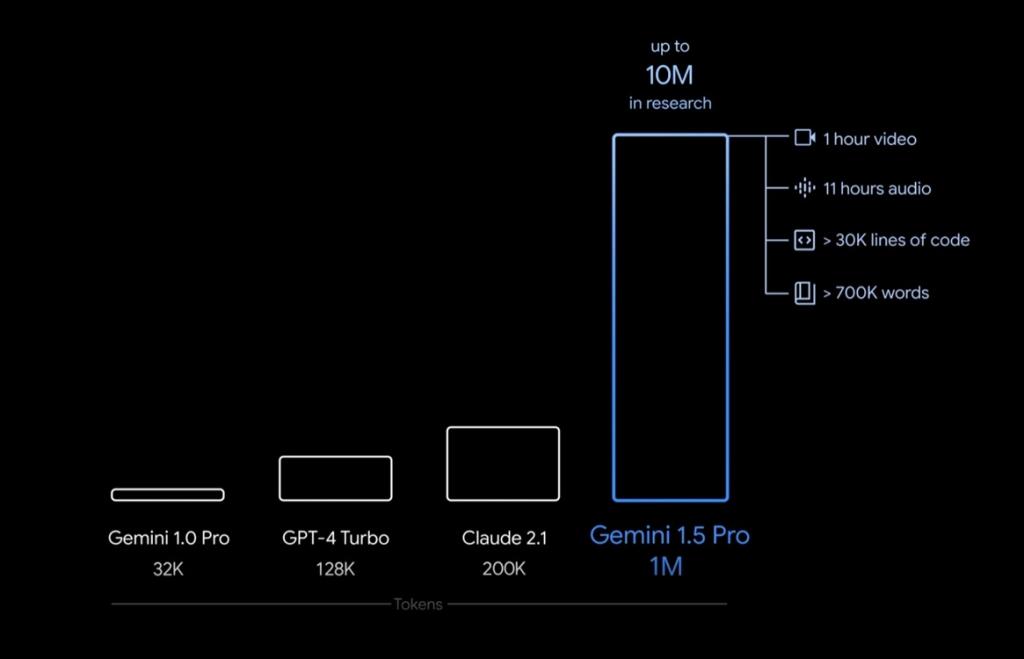

While the Gemini 1.0 family of models has a context window of up to 32K tokens, the 1.5 Pro model increases the standard context length to 128K tokens. Beyond that, it supports a massive context window of up to 1 million tokens, much larger than GPT-4 Turbo’s 128K and Claude 2.1’s 200K tokens.

Gemini 1.5 Pro Built on Mixture-of-Experts (MoE) Architecture

Google says Gemini 1.5 Pro is a mid-size model, but it performs nearly the same as Gemini 1.0 Ultra while using less compute. This is possible because the 1.5 Pro model is built on the Mixture-of-Experts (MoE) architecture, which OpenAI’s GPT-4 is also reported to use. This is the first time Google has released an MoE model instead of a single dense model.

If you’re unfamiliar with the concept of MoE architecture, it consists of several smaller expert models that are activated depending on the task at hand. Using specialized models for specific tasks delivers better and more efficient results.
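To make the idea concrete, here is a minimal toy sketch of MoE routing in Python. It is not how Gemini 1.5 Pro is implemented (Google has not published those details); the `ToyMoE` class, the random router weights, and the "experts" that simply scale their input are all assumptions made for illustration. The point it shows is that a router scores every expert, but only the top few actually run for a given input.

```python
import math
import random


def softmax(scores):
    """Turn raw router scores into probabilities."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(scale):
    """Hypothetical expert: just scales every input value."""
    return lambda x: [scale * v for v in x]


class ToyMoE:
    """Illustrative top-k Mixture-of-Experts layer (not Google's design)."""

    def __init__(self, num_experts, dim, top_k=2, seed=0):
        rng = random.Random(seed)
        self.experts = [make_expert(i + 1) for i in range(num_experts)]
        # One weight row per expert; real routers learn these weights.
        self.router = [
            [rng.uniform(-1, 1) for _ in range(dim)] for _ in range(num_experts)
        ]
        self.top_k = top_k

    def route(self, x):
        """Return (expert_index, weight) pairs for the top-k experts."""
        scores = [sum(w * v for w, v in zip(row, x)) for row in self.router]
        probs = softmax(scores)
        top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
        top = top[: self.top_k]
        norm = sum(probs[i] for i in top)
        return [(i, probs[i] / norm) for i in top]

    def forward(self, x):
        """Run only the selected experts and blend their outputs."""
        out = [0.0] * len(x)
        for idx, weight in self.route(x):
            expert_out = self.experts[idx](x)
            out = [o + weight * v for o, v in zip(out, expert_out)]
        return out


moe = ToyMoE(num_experts=4, dim=3, top_k=2)
print(moe.route([1.0, 0.5, -0.2]))    # which experts were picked, and their weights
print(moe.forward([1.0, 0.5, -0.2]))  # blended output from those experts only
```

With four experts and `top_k=2`, only half of the experts do any work per input, which is the intuition behind an MoE model using less compute than a dense model of similar total size.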

Coming to the large context window of Gemini 1.5 Pro, it can ingest vast amounts of data in one go. Google says the 1 million token context length can process 700,000 words, or 1 hour of video, or 11 hours of audio, or codebases with over 30,000 lines of code.
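Google’s figures imply roughly 1.43 tokens per word (1,000,000 tokens for 700,000 words). As a rough sketch, that ratio can be used to estimate whether a document would fit in the window. The helper names below are hypothetical, and counting whitespace-separated words is only an approximation; real token counts depend on the model’s tokenizer.

```python
# Figures quoted by Google for the 1 million token window.
CONTEXT_TOKENS = 1_000_000
WORDS_AT_LIMIT = 700_000


def estimate_tokens(text):
    """Estimate tokens from a whitespace word count (approximation only)."""
    words = len(text.split())
    # Integer ceiling division avoids floating-point rounding surprises.
    return -(-words * CONTEXT_TOKENS // WORDS_AT_LIMIT)


def fits_in_context(text, limit=CONTEXT_TOKENS):
    """True if the estimated token count is within the context limit."""
    return estimate_tokens(text) <= limit


sample = "one two three four five six seven"
print(estimate_tokens(sample))   # 7 words at ~1.43 tokens per word
print(fits_in_context(sample))
```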

To test Gemini 1.5 Pro’s retrieval capability, given that it has such a large context window, Google ran the Needle In A Haystack challenge, and according to the company, the model recalled the needle (a text statement) 99% of the time.
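For readers who have not seen this kind of test, the sketch below shows the general shape of a Needle In A Haystack check: hide one statement at different depths in a long filler text, ask about it, and measure how often the answer comes back. It is not Google’s evaluation harness. The function names are hypothetical, and `fake_model` is a stand-in that just searches the prompt; in practice you would replace it with a call to a real model.

```python
import re


def build_haystack(filler_sentences, needle, depth):
    """Insert the needle at a fractional depth (0.0 = start, 1.0 = end)."""
    pos = round(depth * len(filler_sentences))
    parts = filler_sentences[:pos] + [needle] + filler_sentences[pos:]
    return " ".join(parts)


def recall_rate(ask_model, filler, needle, question, expected, depths):
    """Fraction of depths at which the model's answer contains `expected`."""
    hits = 0
    for depth in depths:
        prompt = build_haystack(filler, needle, depth) + "\n\n" + question
        if expected.lower() in ask_model(prompt).lower():
            hits += 1
    return hits / len(depths)


def fake_model(prompt):
    """Stand-in for a real model call (assumption): regex lookup in the prompt."""
    match = re.search(r"The secret code is (\w+)\.", prompt)
    return match.group(1) if match else "I don't know"


filler = ["The sky is blue."] * 100
needle = "The secret code is pineapple."
rate = recall_rate(
    fake_model, filler, needle,
    question="What is the secret code?",
    expected="pineapple",
    depths=[0.0, 0.25, 0.5, 0.75, 1.0],
)
print(f"Recall: {rate:.0%}")
```

Varying both the depth and the total haystack length is what makes the test informative, since models often retrieve well near the start or end of a prompt but miss statements buried in the middle.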

In our comparison between Gemini 1.0 Ultra and GPT-4, we ran the same test, but Gemini 1.0 Ultra simply failed to retrieve the statement. We will certainly test the new Gemini 1.5 Pro model and share the results.

To be clear, the 1.5 Pro model is currently in preview, and only developers and customers can test the new model using AI Studio and Vertex AI. You can click on the link to join the waitlist. Access to the model will be free during the testing period.